Memory… Debugging… Go!

What is memory?

Memory is an information storage component in a computer system. It can be either a standalone component (such as a hard disk drive, a solid-state drive, or random access memory/RAM) or embedded within other components, such as the CPU (L1 to L4 caches) or GPUs.

It can also be classified by its volatility, which refers to whether the component can retain data without being powered by electricity.

Volatile memory can only store data while it’s powered, so once the computer is unplugged, the device loses all of its data, whereas non-volatile memory can hold data permanently, regardless of power.

From the software perspective, we refer to it as physical memory.

In the beginning of time, even before the initial UNIX timestamp (lol), folks would interact with physical memory directly using assembly language, also known as asm.

Just to give you an example, the first assembly code ever seen in the wild dates from 1947, in Coding for A.R.C. by Kathleen and Andrew Booth; its predecessor was, well, machine code (binary).

Behold the lovely snippet for a hello world program in x86 Linux-compatible asm:

When we didn’t have complex operating systems, all computers were mainframes. Programs were written to interact directly with all system components, such as the CPU, memory, devices, and anything else within reach.

But then, another problem arose: every time someone invented a new CPU architecture, you had to learn its assembly language from scratch: operations, registers, instructions, everything.

Not only that, but computers were becoming more and more powerful, a need to keep track of their usage surfaced, and companies and engineers kept asking for more. So, eventually, computing moved from single-tasking to multitasking.

Imagine that, at the time, you could only run one program at a time. Just think about it for a second.

So folks started building more complex functionality into operating systems, creating abstractions that made programming friendlier and more productive, so that programmers wouldn’t crash computers by trying to poke memory with a stick from a distance.

How memory works

With the history out of the way, let’s understand some fundamental pieces of how memory works.

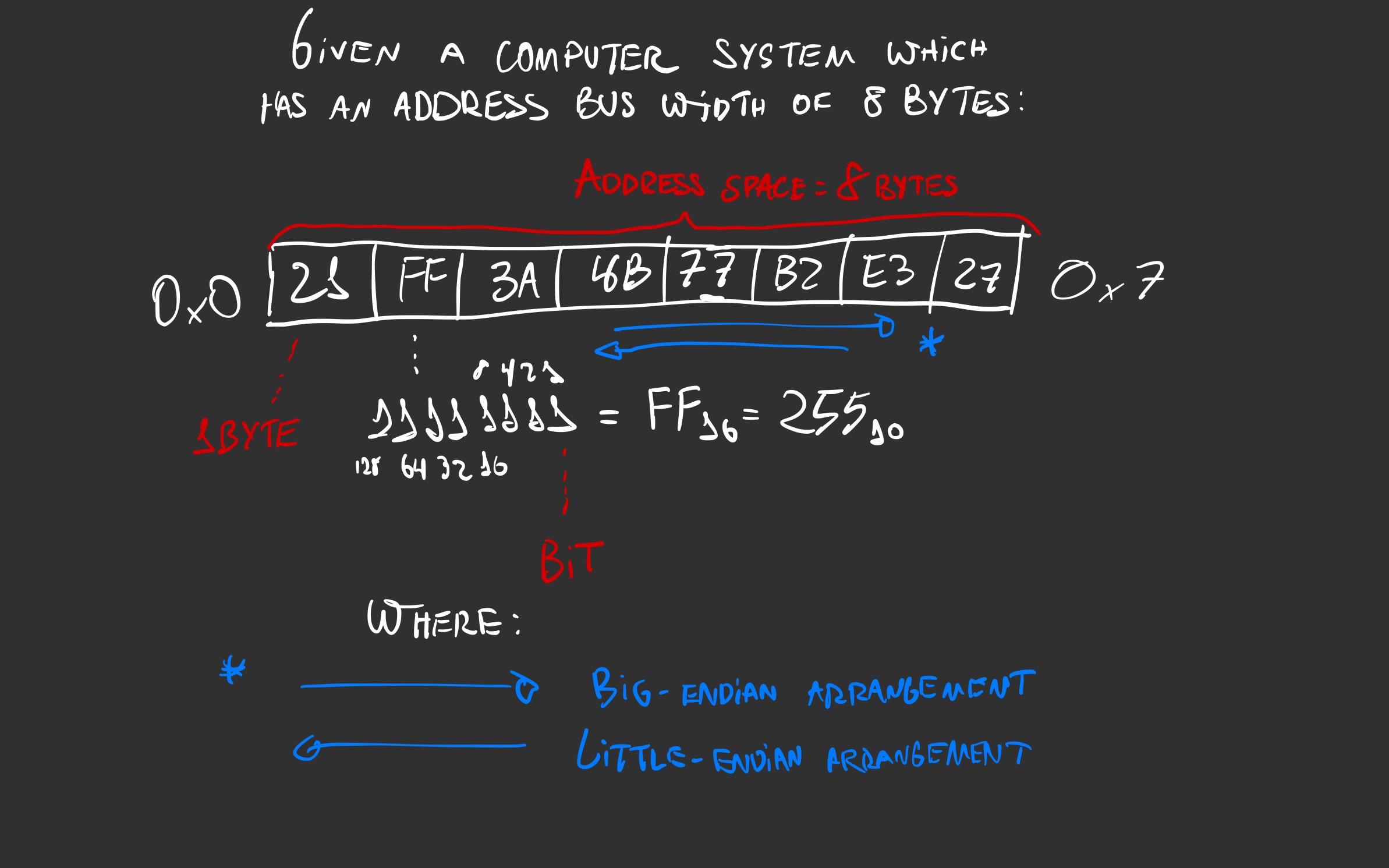

Abstractly speaking, memory is a sequence of cells that can hold data. Each cell holds a byte (eight bits) and has its own address. The CPU usually moves data in units of a word, which is four or eight bytes (32 or 64 bits) depending on the architecture; on most platforms, that’s also the size of a memory address.
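To make the byte-per-address idea concrete, here is a small Go sketch (Go being the language this post eventually focuses on). It relies only on the standard `unsafe` package: it prints the pointer size of the current platform, which matches the word size on common 32- and 64-bit targets, and shows that adjacent bytes of an array sit exactly one address apart. The helper names are hypothetical.

```go
package main

import (
	"fmt"
	"unsafe"
)

// wordSize returns the size of a pointer-sized integer in bytes, which on
// common 32- and 64-bit platforms matches the machine word size.
func wordSize() uintptr {
	return unsafe.Sizeof(uintptr(0))
}

// byteStride returns the distance between the addresses of two adjacent
// bytes in an array: each byte gets its own address.
func byteStride() uintptr {
	var cells [4]byte
	first := uintptr(unsafe.Pointer(&cells[0]))
	second := uintptr(unsafe.Pointer(&cells[1]))
	return second - first
}

func main() {
	fmt.Println("word size in bytes:", wordSize())
	fmt.Println("distance between adjacent byte addresses:", byteStride())
}
```

On a typical 64-bit machine, the first line should report 8, and the second line always reports 1.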

Let’s zoom out further and further.

Since engineers wanted to run more programs at the same time, multiple programs had to share the same memory without colliding with each other. Thus, memory had to become easier to use and manage.

This is how virtualization came to be: it turns a physical component into a virtual model that’s easier to interact with. Virtual memory is one example: a memory management technique that gives each program the illusion of having exclusive use of memory.

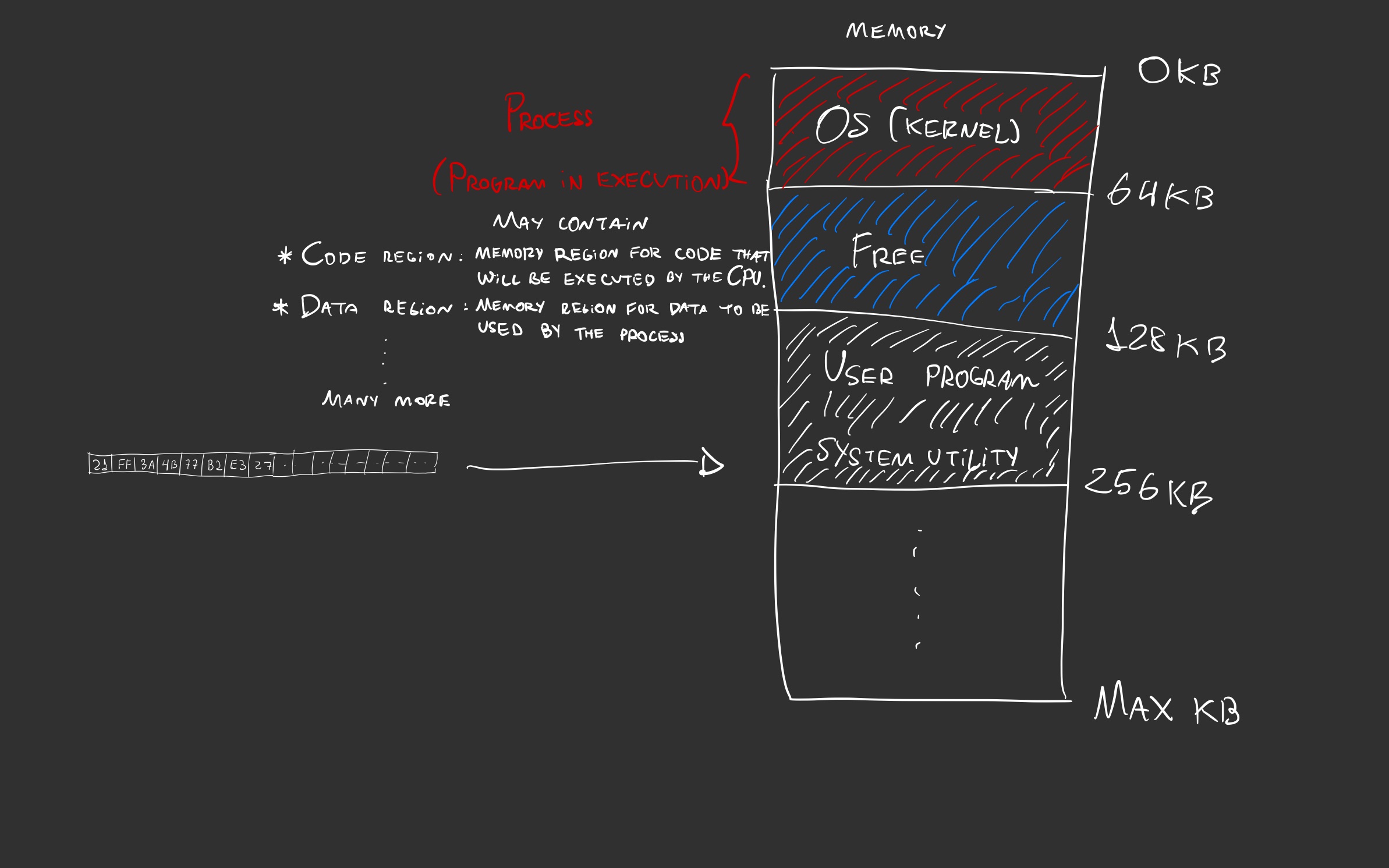

A program that is being executed is called a process. It occupies space in memory for its execution; otherwise, the CPU wouldn’t be able to fetch its instructions.

The memory region it occupies holds its code, its data, and everything else it requires to function. This applies to all programs, even operating systems.

In the early days, only a single program could execute at a given time, and it would occupy the whole memory space along with the OS.

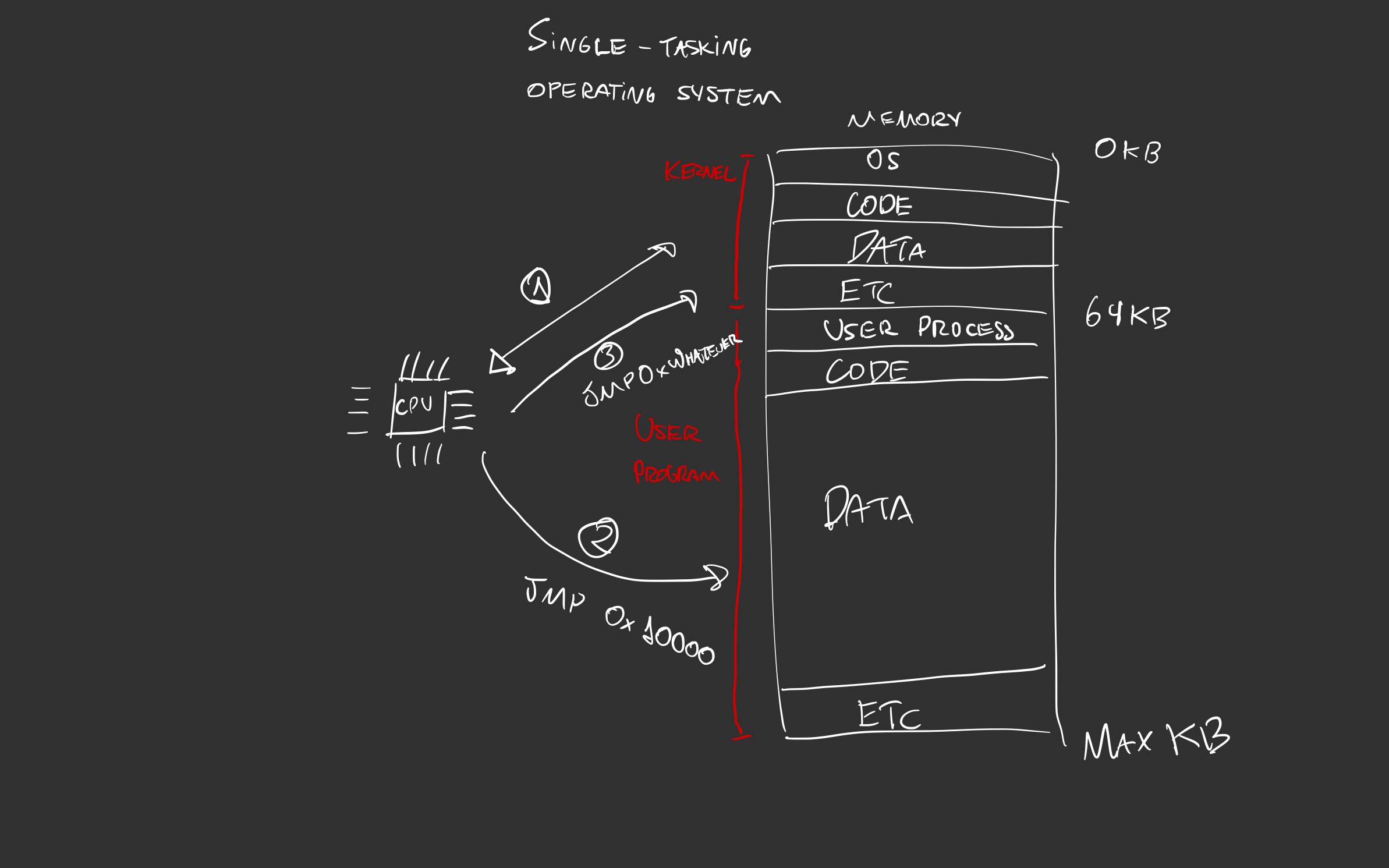

Let’s use this model as an example, since we won’t deal with multitasking at this point; it would only introduce unnecessary complexity.

In this basic model:

- The operating system takes hold of the CPU and doesn’t share it with any other program; it basically executes its own code, nothing else;

- If the computer wants to execute another program, the OS (via its kernel) must transfer execution to the entry point of that program. Usually, the kernel loads the program from the filesystem into memory, and then the OS commences its execution. In asm, you would do that with the instruction `jmp <address>`, in which `address` is where the code entry point is located[^fn1];

- Whenever the user program is done executing, it gives control back to the kernel using the same principle: `jmp <address>`. On x86 Linux, where there’s multitasking, a program instead issues a system call, classically through the software interrupt `int 0x80`, which hands the execution flow back to the kernel (I love abstractions so much).

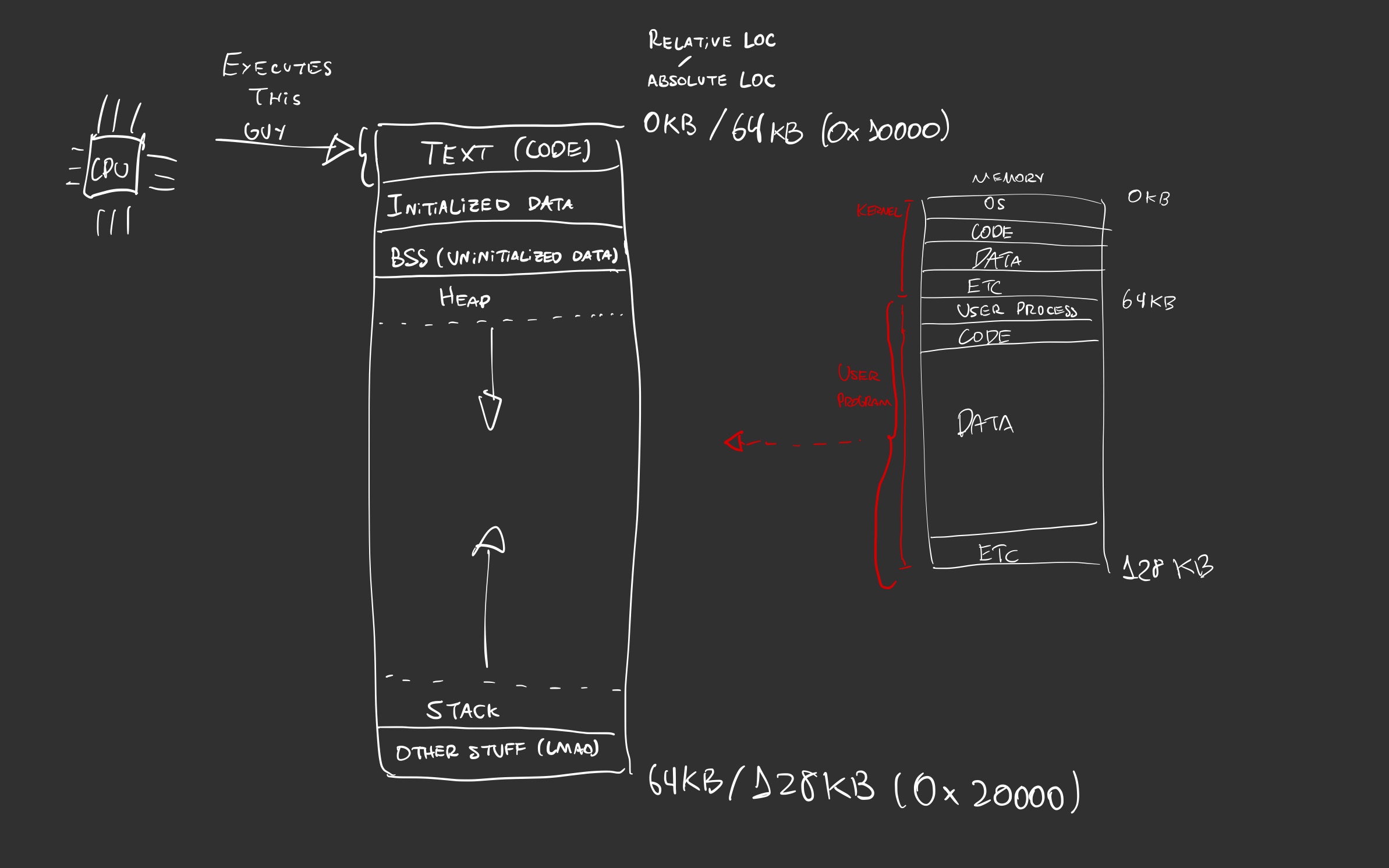

Given that the user program was written in the C programming language and runs on Linux on x86[^fn2], its memory layout looks like the illustration above.

Let’s focus solely on four memory regions that the process occupies:

Initialized data

This is the memory region that stores global and static variables that are explicitly initialized in the source code, so their values are set before the program starts executing. So, what’s that in C?


Uninitialized data (bss/block started by symbol)

The bss is a memory region of the process reserved for global and static variables that are not explicitly initialized with a value in the source code.

It’s not that they stay uninitialized: they’re filled with zeros instead. So if you declare a static pointer like `static char *message`, it’ll just be a NULL pointer. Avoid uninitialized pointers like your life depends on it and you’ll be fine.

Stack

The stack is the region of a process’s memory that holds data tied to the program’s function calls as it executes, such as:

- Local (automatic) variables, that is, those NOT declared `static`;

- Function call data, such as arguments and return addresses (the stack frame);

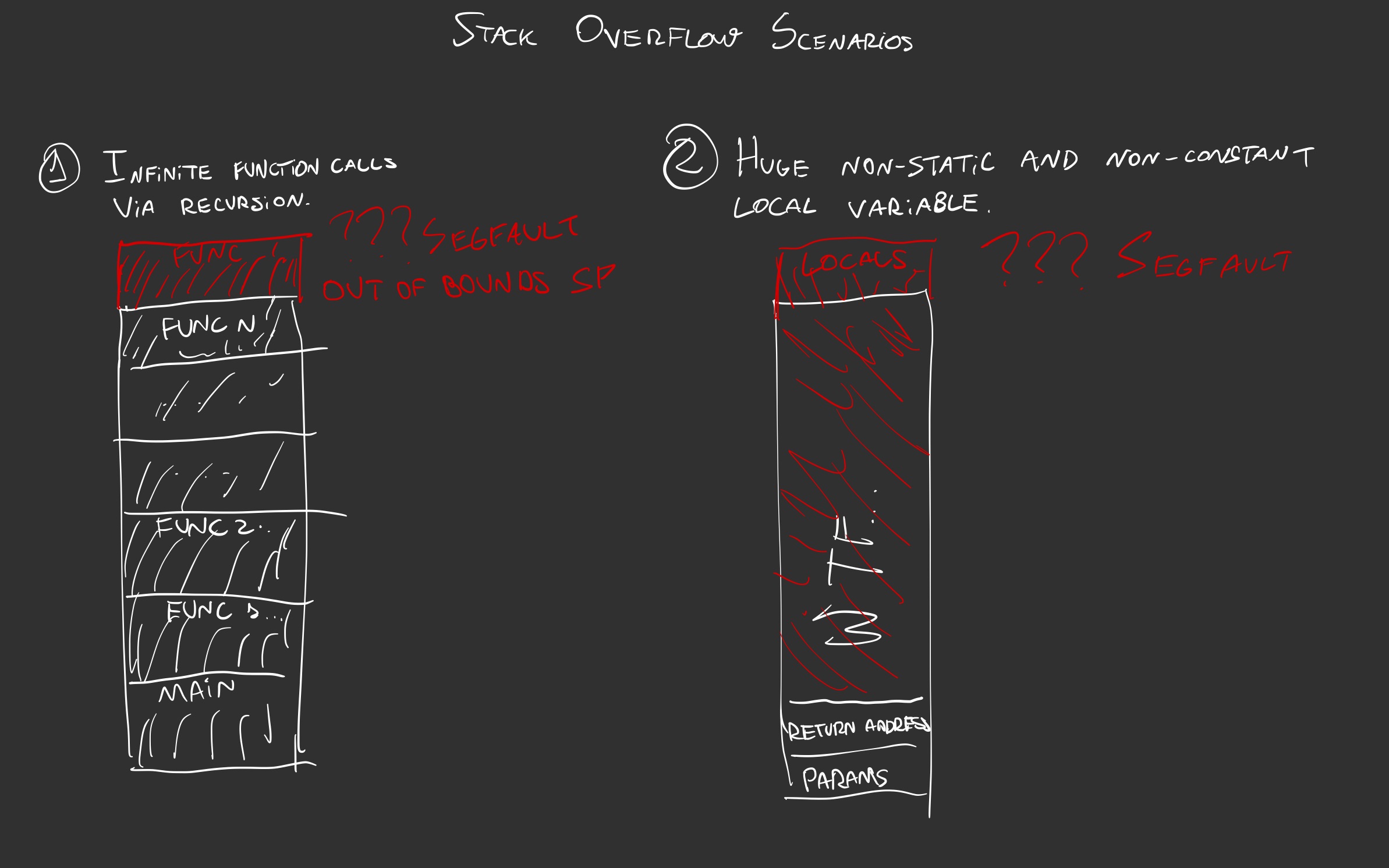

The stack keeps it all. Fun fact: if you keep calling a function in infinite recursion, like here:

Or if you just try to instantiate a huge-ass local variable, like this array of doubles:

You’re setting yourself up for the good ol’ stack overflow in both scenarios!

In both cases, we’re using the stack in different ways: one to call a function, and another to keep local variables.

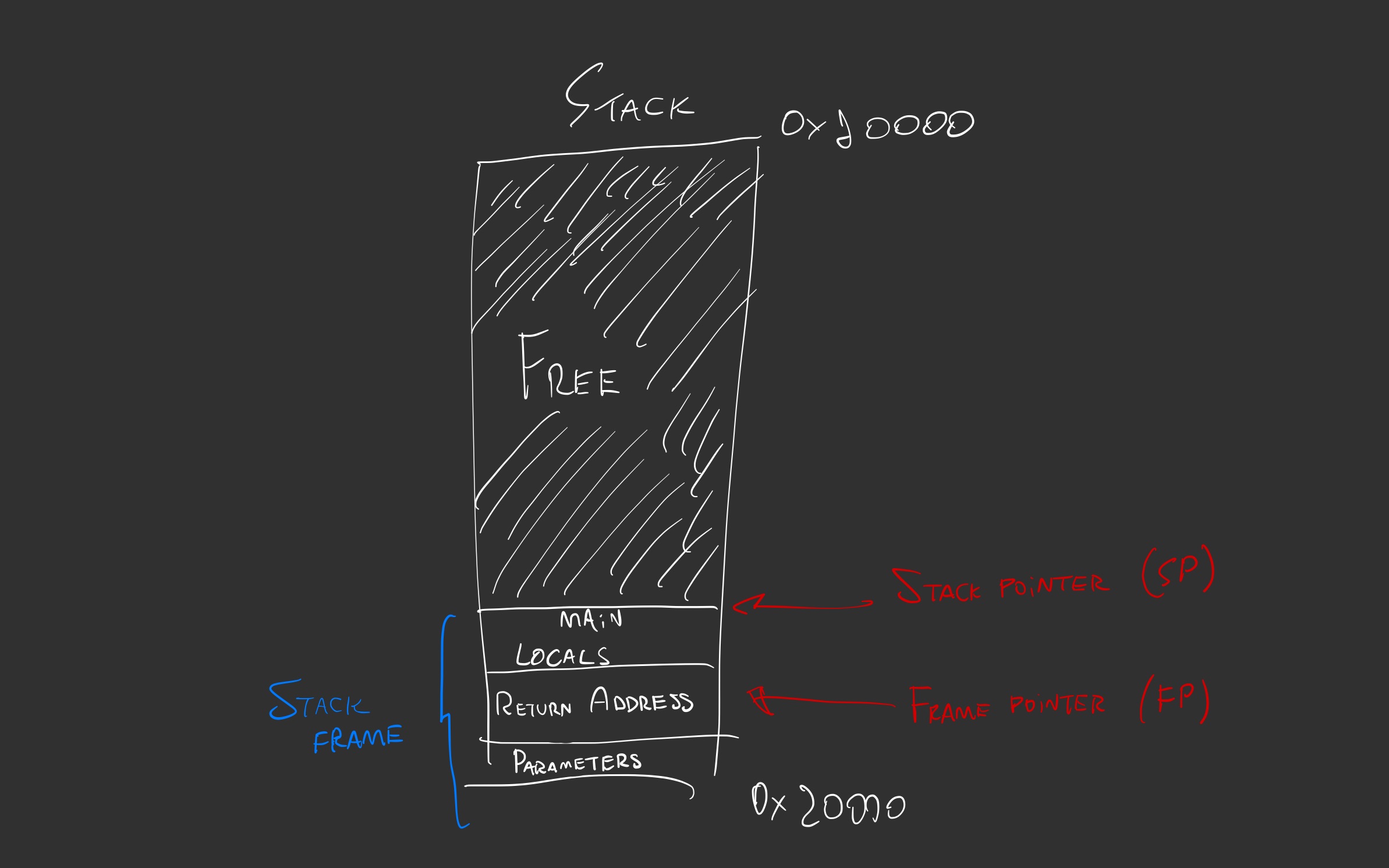

Variables on the stack have a fixed size, known at compile time. Whenever we run a function (often referred to as a routine in this context), a stack frame is pushed on top of the stack.

The stack pointer points to the top of the stack, and the frame pointer points to a fixed location within the current frame, generally its base.

Conceptually speaking, the frame contains local variables of the function, the return address, and the parameters of the called function.

In the real world, it’s a little more convoluted, as CPU registers are also involved in holding this information (arguments, for instance, are often passed in registers).

Now, let’s see what happens in both cases, an infinitely recursive function call and a huge local variable:

Heap

The heap is a different beast, because memory in it can be allocated and released dynamically as the program executes, so the data it holds can grow and shrink.

We use it through memory allocation, in which we ask for a chunk of memory to be reserved for later use, and de-allocation, which releases that chunk once we’re done with it. Here’s an example:

The main difference between the heap and the stack is that stack space for local variables is laid out ahead of time, based on their sizes, while heap memory is requested at runtime, while the program is running.

Creating a local variable on the stack doesn’t require asking for memory at runtime (as long as the stack doesn’t overflow), but a heap allocation can fail, hence the NULL check.

Also, it’s important to remember that if we allocate memory, we ALWAYS need to de-allocate it when we’re done. Otherwise, we get a memory leak: allocated memory whose pointer falls out of the scope of the function we’re executing, so it can no longer be de-allocated for the rest of the execution.

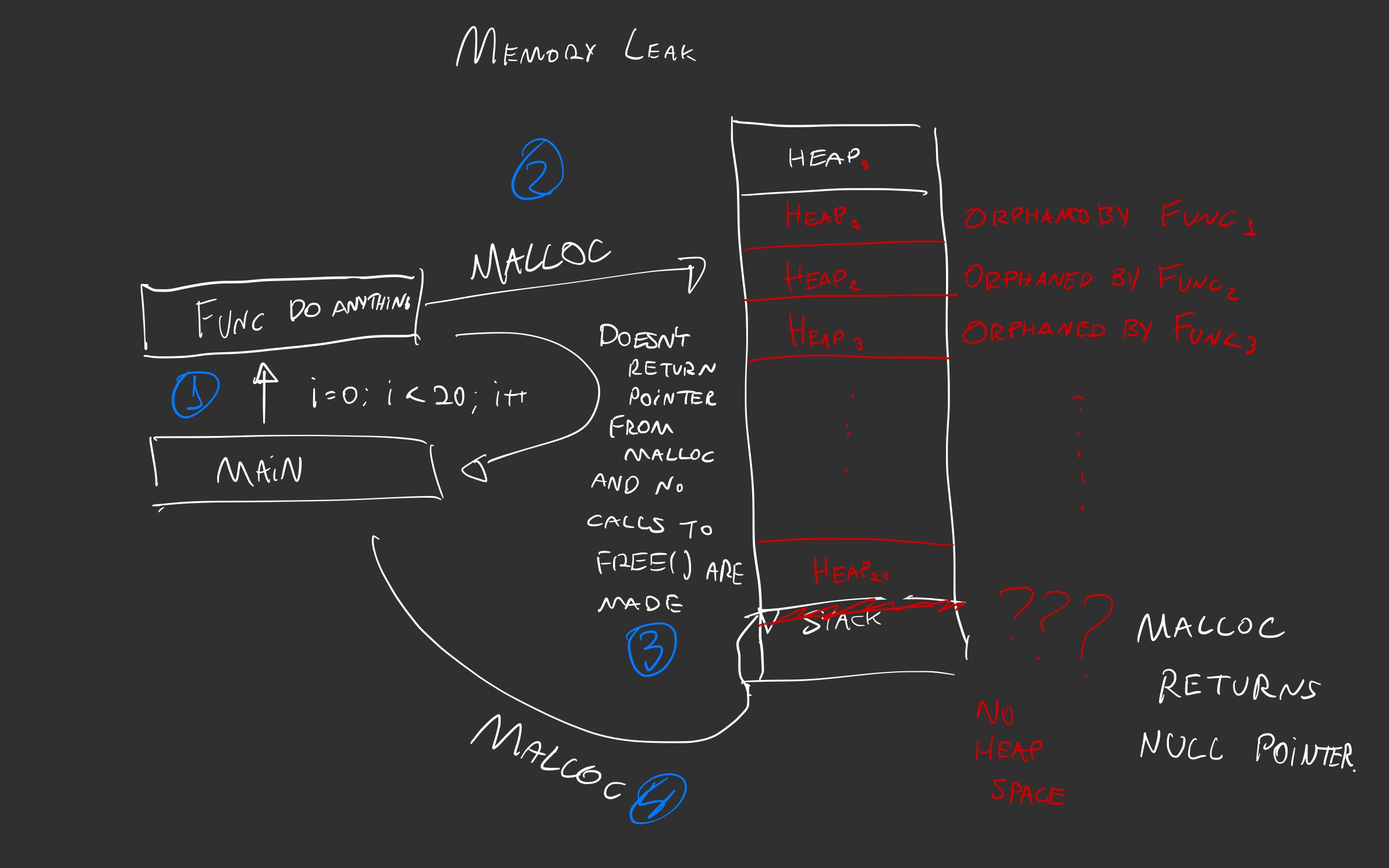

The illustration shows what may happen if we don’t free malloc’d memory:

- The `main` function calls another function that uses `malloc` in an iterative manner;

- `malloc` is called, so the program reaches into the heap to allocate some space for a variable of a given size and type;

- The function returns without giving the pointer back to the caller, and it never calls `free(pointer)` to release the resources being used in the heap;

- If the heap is full and `main` wants to call `malloc`, the allocation fails, returning a `NULL` pointer.

If you keep creating memory leaks, memory usage increases over time, especially if the code that allocates memory without releasing it gets executed time and time again.

Keep in mind that some programming language runtimes cap the heap size, so a program can crash by hitting that limit.

We must remember that memory leaks are usually not that big of a deal for short-lived programs, because the OS reclaims all of a process’s memory when it finishes executing.

However, in long-lived and perpetual programs, it is a big deal. An autopilot program of an Airbus A320 cannot be short-lived.

Go

Go is a programming language designed as a spiritual successor to C/C++, aimed at general-purpose programming with small touches of systems programming[^fn3].

What I mean by small touches of systems programming is that, while Go offers relatively low-level access to system components (you can use pointers, for instance), it still has a garbage collector: a part of the runtime that finds and reclaims allocated memory that is no longer reachable.

Its real payoff is productivity in general-purpose programming, where memory constraints are not a big deal.

Think of REST APIs that don’t operate at scale, ephemeral programs that run once in a while, and programs running in environments with virtually unlimited resources.

They don’t benefit much from the degree of control over memory that systems programming languages such as C/C++, Rust, or Zig provide.

What happens under the hood, though, is fairly complex and hides some traps for programmers who are not used to certain performance nuances.

Garbage Collection

Garbage collection is a memory management technique that automatically reclaims heap memory that is no longer needed, so it can be reused or returned to the OS.

Almost all non-systems programming languages ship with a garbage collector, so it’s not exclusive to Go.

The real catch is understanding how it works in Go specifically. For the whole ordeal, please refer to A Guide to the Go Garbage Collector, but I’ll briefly explain how it works.

The GC only manages data in the heap. Data on the stack doesn’t fall under its management, because stack memory is reclaimed automagically when a function returns.

Remember the use case for the heap: you want it when you don’t know ahead of time exactly how much memory a variable will require (that is, when its size is dynamic).

So dynamic arrays (slices, in Go), hash maps (maps in Go, dictionaries in Python), and data referenced by pointers generally fall under the GC’s management, which determines their lifetime in the program.

Another thing to understand is which kind of GC is in use: Go uses a tracing garbage collector.

It uses a technique called mark-sweep. To understand how it’s applied, unfortunately, I need to introduce two new concepts regarding data held in both the stack and the heap:

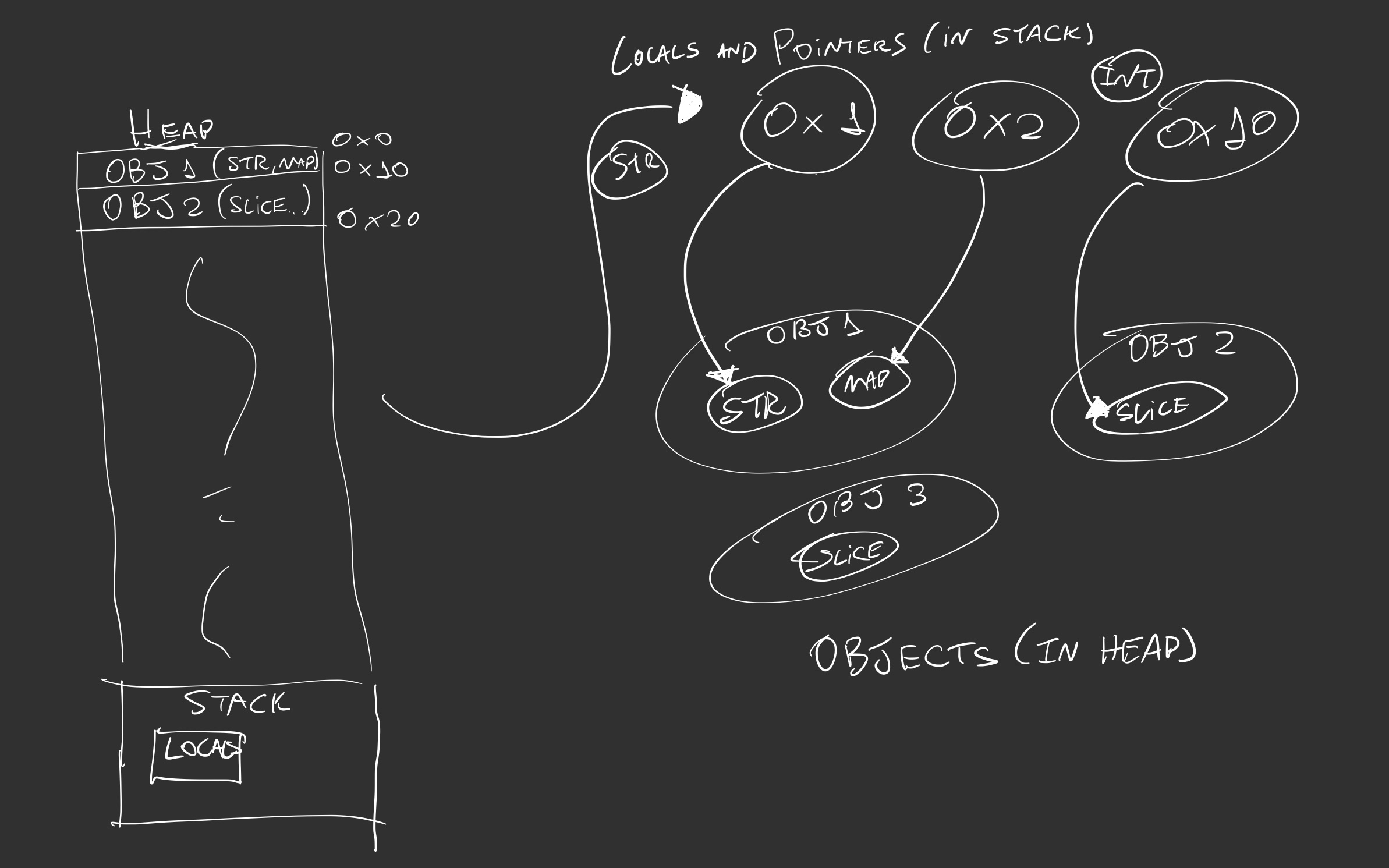

- Object: dynamically allocated memory space which may contain one or more Go values (variables);

- Pointer: the memory address of a Go value, usually notated as `*T`, where `T` is the type.

When a Go program is compiled, the compiler tries to determine each variable’s lifetime, that is, how long it should exist.

Local variables can be reclaimed as soon as the function returns, and we know that dynamically sized values generally live in the heap.

However, if we have a pointer that… points… to a variable, and that pointer may outlive the function (for example, because it’s returned or stored somewhere longer-lived), the compiler can’t prove how long that variable should exist, so the variable escapes to the heap.
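A minimal sketch of this escape analysis in Go; `point`, `sumLocal`, and `newPoint` are hypothetical names for illustration. Building it with `go build -gcflags=-m` asks the compiler to print its escape-analysis decisions, and you should see something along the lines of `moved to heap: p` for the variable in `newPoint`, while the one in `sumLocal` can stay on the stack (exact messages vary between Go versions).

```go
package main

import "fmt"

// point is a hypothetical type used only for this illustration.
type point struct{ X, Y int }

// sumLocal uses p only inside the call, so the compiler can prove it
// doesn't outlive the function and may keep it on the stack.
func sumLocal() int {
	p := point{X: 1, Y: 2}
	return p.X + p.Y
}

// newPoint returns a pointer to a local variable. The pointer outlives
// the call, so p has to escape to the heap.
func newPoint(x, y int) *point {
	p := point{X: x, Y: y}
	return &p
}

func main() {
	fmt.Println(sumLocal())
	fmt.Println(*newPoint(3, 4))
}
```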

Together, objects and pointers form an object graph, as shown in the illustration:

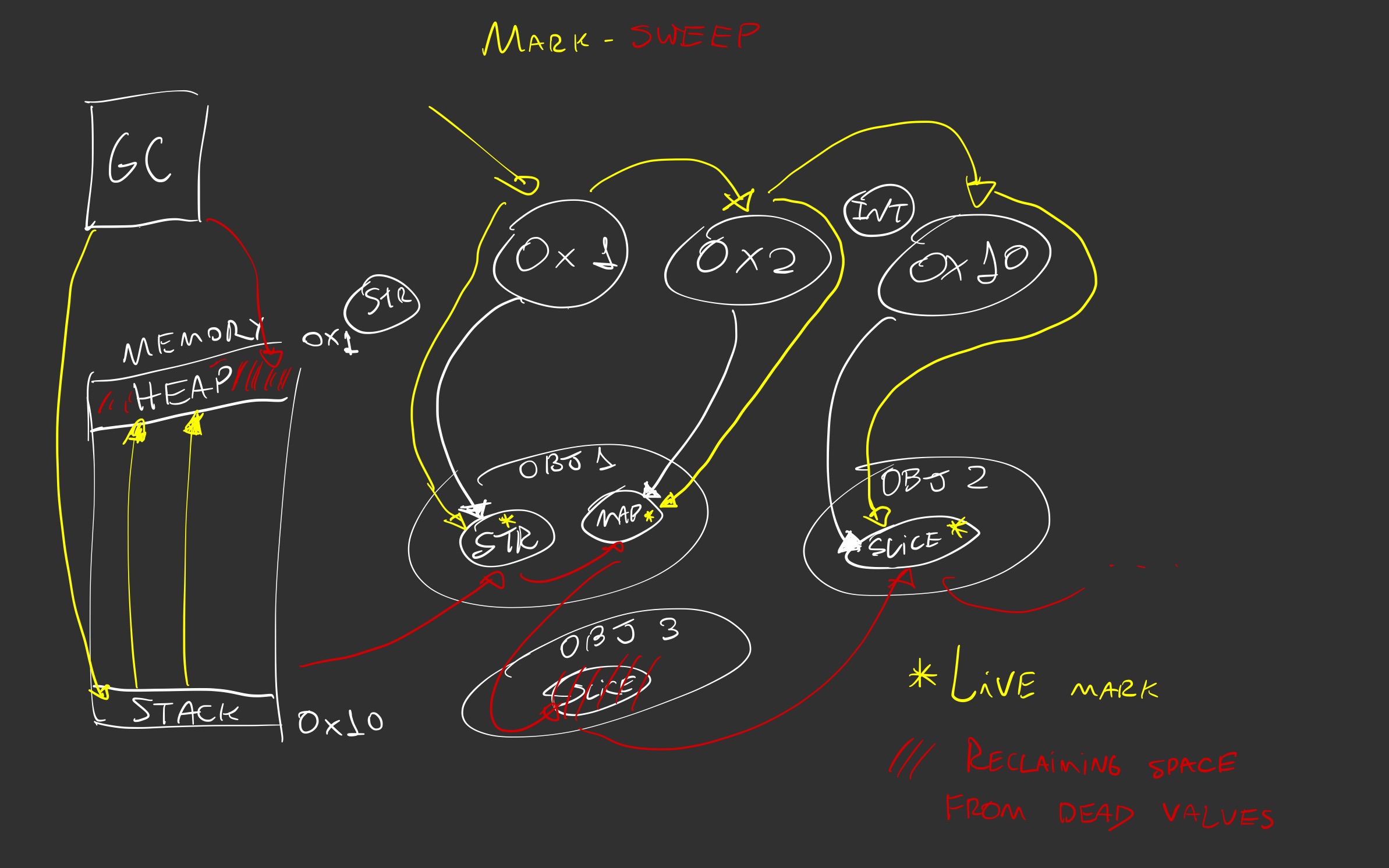

The mark-sweep technique consists of two phases of the GC:

- Sweep: the phase that reclaims heap space occupied by dead Go values, meaning values that are no longer referenced, so their memory can be reused or returned to the OS.

- Mark: the phase that marks live Go values within objects, meaning values that are still referenced and shouldn’t be reclaimed by the GC. It traverses the object graph starting from its roots: pointers held in goroutine stacks and global variables.

Together, this sequence of sweeping -> off (the GC isn’t running) -> marking counts as a single GC cycle. For more on optimizations and implementation details, refer to the Go GC guide in the sources.
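The two phases can be illustrated with a toy model in Go. This is a hypothetical sketch under simplifying assumptions, not how Go’s runtime is implemented (the real collector runs concurrently with the program and is far more involved): objects live in a slice that plays the role of the heap, roots are passed in explicitly, marking walks the object graph from those roots, and sweeping drops everything left unmarked.

```go
package main

import "fmt"

// object is a node in a toy object graph: a hypothetical heap object
// that may hold pointers to other objects.
type object struct {
	name   string
	refs   []*object
	marked bool
}

// toyHeap tracks every allocated object, standing in for the heap.
type toyHeap struct {
	objects []*object
}

// alloc creates an object and records it in the toy heap.
func (h *toyHeap) alloc(name string) *object {
	o := &object{name: name}
	h.objects = append(h.objects, o)
	return o
}

// mark flags o and everything reachable from it as live.
func mark(o *object) {
	if o == nil || o.marked {
		return // already visited (this also handles cycles)
	}
	o.marked = true
	for _, r := range o.refs {
		mark(r)
	}
}

// collect marks from the given roots, then sweeps: unmarked objects are
// dropped from the heap and their names returned. Marks are cleared on
// survivors so the next cycle starts fresh.
func (h *toyHeap) collect(roots []*object) []string {
	for _, r := range roots {
		mark(r)
	}
	var live []*object
	var freed []string
	for _, o := range h.objects {
		if o.marked {
			o.marked = false
			live = append(live, o)
		} else {
			freed = append(freed, o.name)
		}
	}
	h.objects = live
	return freed
}

func main() {
	h := &toyHeap{}
	a := h.alloc("a")
	b := h.alloc("b")
	h.alloc("c") // nothing ever references c
	a.refs = append(a.refs, b)

	// Treat a as the only root, as if a stack variable pointed to it.
	freed := h.collect([]*object{a})
	fmt.Println("freed:", freed)                 // freed: [c]
	fmt.Println("live objects:", len(h.objects)) // live objects: 2
}
```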

How to debug memory usage in Go

Well, with that said, there’s usually no need to worry about memory allocation and management: with a GC, rest assured, you won’t need to do it manually at all.

However, note that you can still face some issues in the long run if you disregard some guidelines for keeping memory usage in check.

Let me list some of the pitfalls that one may fall into:

- Misuse of dynamic slices (buffering records);

- Passing values instead of pointers;

- Embedding structs within structs;

- Long-term usage of `context.Background()` outside `main()`;

- An excessive number of goroutines.

In a huge codebase, it can be difficult to pinpoint where a leak is occurring. This is why profiling is necessary: the process of analyzing a program’s resource usage, such as memory, CPU, execution traces, mutexes, goroutines, and much more.

There are two approaches we can use to do so.

runtime.MemStats

The `runtime` package, as its documentation states, interacts with Go’s runtime system, which in turn can expose resource usage, such as memory. There are two structs we can use to evaluate it:

- `runtime.MemProfileRecord`: This struct describes the live objects allocated by a particular call stack (an allocation site), acting like a brief summary of the memory profile. For instance, you can evaluate the number of bytes in use, the number of objects in use, and the stack trace associated with the record.

- `runtime.MemStats`: Like the above, it acts like a snapshot, but it covers the whole process and shows more information about memory usage.

You may use one or the other, as their metrics overlap. However, `MemStats` can show whether your peak memory usage may surpass the resource limits you’ve set for your environment (e.g., 2GiB of RAM).

Also, it contains a good amount of GC metrics. For a more detailed view, I’d definitely go with it.

There’s no prerequisite to use it: you just import the `runtime` package, as it comes from the standard library.

This snippet shows how we can use it:

- We declare a non-initialized (zero-valued) `MemStats` struct;

- We call `runtime.ReadMemStats` and provide a pointer to the struct;

- Then, we print it to a log file.

We repeat the same process every time we want to take a snapshot of the memory usage at some point in the program.

The documentation for this struct details what each field represents, but in my own words:

- `HeapAlloc` is the number of bytes currently allocated in the heap (including unreachable objects the GC hasn’t swept yet);

- `HeapSys` is the number of bytes of heap memory obtained from the OS, which roughly tracks the largest size the heap has reached during the program’s execution;

- `StackSys` is the number of bytes used by stacks plus the bytes obtained from the OS for OS thread stacks;

- `NumGC` is the number of completed GC cycles in the program.
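Here is a minimal sketch of those steps, assuming we want one snapshot before and one after allocating a slice. The helper names (`takeSnapshot`, `logSnapshot`, `allocate`) and the `memstats.log` file name are hypothetical; only `runtime.ReadMemStats` and the `MemStats` fields come from the standard library.

```go
package main

import (
	"log"
	"os"
	"runtime"
)

// takeSnapshot declares a zero-valued MemStats struct and lets
// runtime.ReadMemStats fill it in through a pointer.
func takeSnapshot() *runtime.MemStats {
	var m runtime.MemStats
	runtime.ReadMemStats(&m)
	return &m
}

// logSnapshot writes the fields discussed above to the logger.
func logSnapshot(l *log.Logger, label string, m *runtime.MemStats) {
	l.Printf("%s: HeapAlloc=%d HeapSys=%d StackSys=%d NumGC=%d",
		label, m.HeapAlloc, m.HeapSys, m.StackSys, m.NumGC)
}

// allocate builds a slice of n uint64 values (8 bytes each) on the heap.
func allocate(n int) []uint64 {
	data := make([]uint64, n)
	for i := range data {
		data[i] = uint64(i)
	}
	return data
}

func main() {
	// memstats.log is a hypothetical file name; any writable path works.
	f, err := os.Create("memstats.log")
	if err != nil {
		log.Fatal(err)
	}
	defer f.Close()
	logger := log.New(f, "", log.LstdFlags)

	logSnapshot(logger, "before", takeSnapshot())
	data := allocate(1_000_000)
	logSnapshot(logger, "after", takeSnapshot())

	runtime.KeepAlive(data) // keep data reachable until after the last snapshot
}
```

Call `takeSnapshot` wherever you want another data point; comparing consecutive `HeapAlloc` values shows how much a given section of code keeps on the heap.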

We can use this to measure how much memory a program takes at a given point. Let’s run the example real quick:


As you can see, the slice allocation took a little heap space, but nothing dramatic.

Since the program didn’t run for long and didn’t allocate enough memory to reach the GC’s heap target, we don’t see any GC activity, hence `NumGC` being zero.

Files and logging are not the only way to profile an application: pprof is a useful profiling and visualization tool for that as well.

pprof

pprof is a profiling visualization and analysis tool created by Google, the same company that created the Go programming language.

It’s mostly written in Go, and its goal is to visualize and analyze profiling data collected during a program’s execution and stored in the profile.proto format, a protocol buffer that describes a set of call stacks and symbolization information.

If you happen to have Go installed, there’s no need to install pprof separately: the Go toolchain ships it as `go tool pprof`, and the standard library includes the `net/http/pprof` package, which serves profiling data over HTTP. For more information, you can check its source code in `src/net/http/pprof/pprof.go`.

Again, if we want to understand how our programs are misusing memory, we need to know how to fetch that information from them, and it’s quite simple if you already have a Go project.

How to use pprof

The simplest way is to import the package into your program (generally in package `main`, along with the bootstrapping code) and then start an HTTP server on an available port:

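The `profile.Start(profile.MemProfile).Stop()` call (from the third-party `github.com/pkg/profile` package) is optional; if you don’t use it, all the profiles described in the `net/http/pprof` documentation are still served.

Of course, since we know what we’re dealing with, we might as well focus on a single profile.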
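A minimal sketch of such a server using only the standard library (it leaves out the optional `profile.Start` call). The blank import of `net/http/pprof` registers the `/debug/pprof/` handlers on `http.DefaultServeMux`, so passing a `nil` handler to `http.ListenAndServe` is enough to serve them. The `localhost:8080` address mirrors the URL used in this post, and `heapProfileURL` is a hypothetical helper.

```go
package main

import (
	"log"
	"net/http"
	_ "net/http/pprof" // side effect: registers /debug/pprof/* on http.DefaultServeMux
)

// addr matches the port used in the text; any free port would do.
const addr = "localhost:8080"

// heapProfileURL is where the heap profile becomes available once the
// server is running (what curl or go tool pprof would fetch).
func heapProfileURL() string {
	return "http://" + addr + "/debug/pprof/heap"
}

func main() {
	log.Printf("serving profiles; heap profile at %s", heapProfileURL())
	// A nil handler means http.DefaultServeMux, which already holds the
	// pprof handlers registered by the blank import above.
	log.Fatal(http.ListenAndServe(addr, nil))
}
```

With the server running, `go tool pprof http://localhost:8080/debug/pprof/heap` fetches the heap profile and opens the interactive prompt.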

So, how can we use it?

Assuming that you have the following program:

With that in mind, we can start fetching information from the profiler. Remember, we’re exposing the data within a program’s execution via HTTP, so we must use something to fetch an HTTP response out of it.

There are two ways of fetching the data:

- Either use `curl` to fetch it from `http://localhost:8080/debug/pprof/heap`;

- Or use `go tool pprof` directly.

Well, let’s go with the second option!


As expected, it’s a CLI tool you can use to evaluate the metrics in the profile. How can we see the top 10 entities taking the most memory in the application? The `top` command does just that, as it lists the top 10 entries by default.

At this point, there’s no activity happening apart from the profiling endpoints being served by the application. However, what happens if we make a slice with millions of `uint64` elements, which take eight bytes each?
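As a quick back-of-the-envelope check, the backing array of a `[]uint64` takes its length times eight bytes. The 10 million element count below is a hypothetical figure chosen for illustration; with it, the program prints roughly 76.3 MiB.

```go
package main

import (
	"fmt"
	"unsafe"
)

// sliceBytes returns how many bytes the backing array of a []uint64
// with n elements occupies: n elements times 8 bytes per element.
func sliceBytes(n int) uintptr {
	return uintptr(n) * unsafe.Sizeof(uint64(0))
}

func main() {
	// 10 million is a hypothetical element count chosen for illustration.
	n := 10_000_000
	fmt.Printf("%d uint64 elements ≈ %.1f MiB\n", n, float64(sliceBytes(n))/(1<<20))
}
```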

Well, so `main` is taking that much space in the heap, alright… Also, take into consideration that every time you run the pprof tool against the server, you’re taking up more heap space as well:

What the hell, a 400MB increase over a few debug requests.

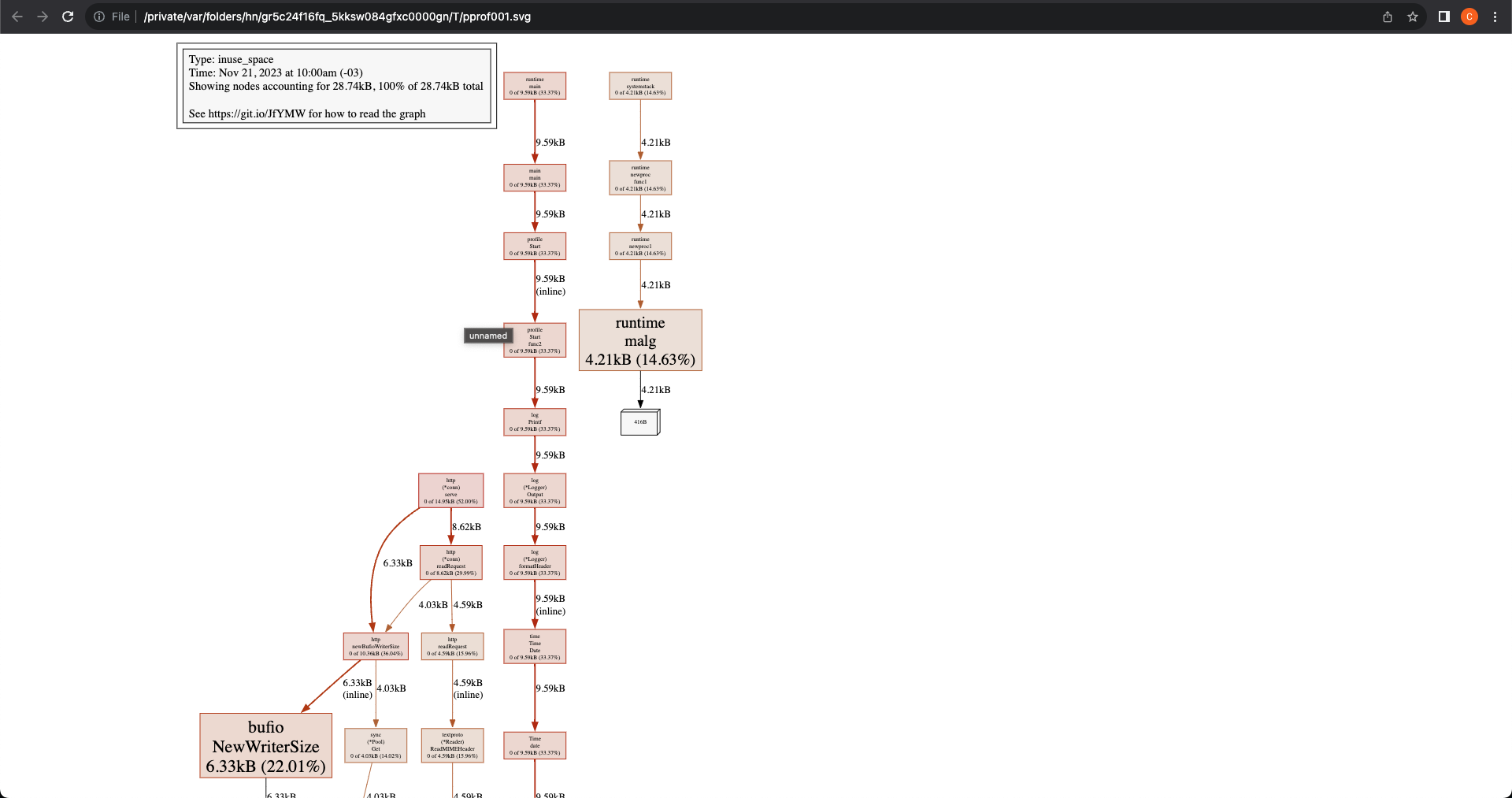

So let’s revert the example to simply running the web server. Since pprof is a visualization tool, how can we see how much memory each function in the application is using?

We can use a graph mode! There are multiple ways to visualize it, but you’ll mostly use the web browser to display the .svg file.

The `web` command in pprof will open a web browser so you can see the graph image (it requires Graphviz to be installed):

To comprehend the graph, here are some guidelines:

- Check the `.svg` header to know what the graph is about. In this case, it indicates `inuse_space`, which refers to space currently in use in the heap;

- In the same header, you can see how much of the total space used by the application the graph accounts for. In this case, the graph represents all function calls (100%) of the application;

- Each node in the graph represents a function, and each function may hold some amount of space, accounting for a percentage of that 100%;

- A function may call another function, hence the directed edges between nodes. In this case, the `main` function calls another function that cascades into the set of functions required to start the profiling server;

Here are the options you may use with the web command:

- `n`: limits the number of nodes shown in the graph;

- `focus_regex`/`ignore_regex`: limit which nodes are shown in the graph.

With that said, one can see what’s going on with the application’s memory usage at a given time.

Now, let’s see how one identifies these issues happening in an HTTP server program.

Optimization scenarios

I’m going to present two scenarios that may be good for basic optimizations:

- Misuse of dynamic slices (buffering records);

- Embedding structs/slices within structs;

Reasoning

The reason to optimize applications that need to scale is that HTTP servers are long-lived programs that aren’t expected to quit.

In the heap section, I explained how memory leaks occur in a programming language without a garbage collector. Well, memory leaks can occur in garbage-collected languages too, just in different ways, as listed above.

Given an HTTP server written with the following snippet:

We may call the API using `curl` and inspect its memory usage with pprof like the following:


And here’s the pprof report:

Calling the `/hello` endpoint results in 1MB of heap usage over time, but remember that the GC may kick in to reclaim dead values.

Let’s see some of the scenarios and how to optimize them.

Issue

In Go, the dynamic array type is called a slice, and it may be created with a preset size (which pre-allocates memory and fills it with zero values) or with no preset size (which doesn’t), like the following:

The catch here is that, under the hood, they’re all dynamic. You can keep appending values with `append()`, and whenever the length reaches the capacity, Go allocates a bigger backing array (roughly doubling the capacity for small slices) and copies the elements over:
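A small sketch of both kinds of declaration and of `append` growing a slice. The `capacityChanges` helper is hypothetical, and the exact capacities it reports are a runtime implementation detail that can change between Go versions, so the code only relies on capacity growing as needed.

```go
package main

import "fmt"

// capacityChanges appends n values to an initially empty slice and
// records each distinct capacity it sees, i.e. each reallocation.
func capacityChanges(n int) []int {
	var s []int
	caps := []int{cap(s)}
	for i := 0; i < n; i++ {
		s = append(s, i)
		if c := cap(s); c != caps[len(caps)-1] {
			caps = append(caps, c)
		}
	}
	return caps
}

func main() {
	// Preset size: five elements allocated up front, all zero values.
	preset := make([]int, 5)
	fmt.Println(preset, len(preset), cap(preset)) // [0 0 0 0 0] 5 5

	// No preset size: a nil slice, nothing allocated until we append.
	var dynamic []int
	fmt.Println(dynamic == nil, len(dynamic), cap(dynamic)) // true 0 0

	// Capacities observed while appending 20 values.
	fmt.Println(capacityChanges(20))
}
```

Every new capacity in that last line is a fresh allocation plus a copy, which is why appending in a hot loop can be more expensive than it looks.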

The ease of use of slices is no exaggeration: they’re pretty flexible. However, just because it’s cheap doesn’t mean it’s free[^fn5].

For example, imagine that I work in a government office and I’m building an internal API. What happens if I try to pull ALL records of a `Person` and expose them all at once?

Assuming that 3,500,000 is the whole population of Brasilia (my hometown), we’re fetching all of those people and keeping them in memory.
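The buffering approach can be sketched like this, assuming a hypothetical `Person` type (the real one isn’t shown here) and using `runtime.ReadMemStats` to see how much the heap grows. Your numbers will differ from the ones reported in this post, since they depend on the struct’s fields and on the Go version.

```go
package main

import (
	"fmt"
	"runtime"
)

// Person is a hypothetical record type for this sketch.
type Person struct {
	ID      int64
	Name    string
	Parents []Person
}

// bufferAll mimics pulling ALL records at once: every Person is kept in
// a single slice before anything is sent back to the client.
func bufferAll(total int) []Person {
	people := make([]Person, 0, total)
	for i := 0; i < total; i++ {
		people = append(people, Person{ID: int64(i), Name: "person"})
	}
	return people
}

// heapInUse reports the bytes currently allocated for heap objects.
func heapInUse() uint64 {
	var m runtime.MemStats
	runtime.ReadMemStats(&m)
	return m.HeapAlloc
}

func main() {
	before := heapInUse()
	people := bufferAll(3_500_000) // the Brasilia-sized batch from the text
	after := heapInUse()
	grown := int64(after) - int64(before)
	fmt.Printf("buffered %d records; heap grew by about %d MiB\n", len(people), grown>>20)
	runtime.KeepAlive(people) // keep the slice reachable until after the measurement
}
```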

Does pprof have anything to say?

Hmmm… 244 megs… Not bad. How about the whole population of Brazil (approximately 210M)?

Wow, holy shit. Good luck trying to find affordable infrastructure to keep your API running this way.

Remember: even if you have a beefy computer that can hold this huge amount of information, production-grade infrastructure (even Kubernetes) won’t be able to keep up with this without destroying itself trying to arrange resources.

But let’s go back to our 3.5M population example. It means that buffering structs and embedding slices/structs is taking a toll on our API, making it hold 244MB. How can we fix that?

Use pointers within the slice

The first optimization is to use pointers instead of plain structs within a slice. So instead of storing, say, 30B per element, you’re storing just 8B.

Remember: a pointer is 4 or 8 bytes (on 32- or 64-bit platforms), so if a struct, along with its fields, is smaller than that, it’s not even worth storing it behind a pointer.
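To see what each slice slot costs, this sketch compares `unsafe.Sizeof` for a hypothetical `Person` value and a pointer to one. On a typical 64-bit platform this struct takes 48 bytes (8 for the `int64`, 16 for the string header, 24 for the slice header) against 8 for the pointer. Keep in mind that it only measures the slice’s own backing array: any `Person` values the pointers refer to still occupy heap space of their own.

```go
package main

import (
	"fmt"
	"unsafe"
)

// Person is a hypothetical record type for this sketch.
type Person struct {
	ID      int64
	Name    string
	Parents []Person
}

// slotSizes returns how many bytes one element slot takes in a []Person
// and in a []*Person. Only the slice's own backing array is measured.
func slotSizes() (value, pointer uintptr) {
	return unsafe.Sizeof(Person{}), unsafe.Sizeof(&Person{})
}

func main() {
	value, pointer := slotSizes()
	const n = 3_500_000 // population figure from the text
	fmt.Printf("[]Person:  %d bytes per slot, ~%d MiB for %d slots\n", value, uintptr(n)*value>>20, n)
	fmt.Printf("[]*Person: %d bytes per slot, ~%d MiB for %d slots\n", pointer, uintptr(n)*pointer>>20, n)
}
```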

Let’s see how it goes, shall we?

Yup, that’s a HUGE improvement! From 244MB to 19MB in heap usage. Still, it’s not enough. How about making the embedded slices store pointers as well?

Well… it’s not worth making `Parents` a slice of pointers, because each parent would need its own `Parents` assigned as well.

So a `Person` without `Parents` (that’s some kind of tasteless joke, but it wasn’t intended) is smaller than 8 bytes, so `Parents` values may not be worth storing as pointers.

But I still have one more trick up my sleeve.

Use channels instead of buffering with slices

Channels are a Go mechanism through which you can pass data across different parts of the code (functions, goroutines, etc.).

How’s that useful?

Well, let’s see how it goes.

The querying logic shouldn’t live inside that handler function, so let’s extract it and use channels.

A little explanation before moving to our execution:

- We extracted the logic of our querying outside our handle;

- The query function returns a receive-only channel;

- While our `handlePerson` reads from the channel, a goroutine we launched sends values into the channel returned by our `queryPerson` function;

- Do NOT forget to close the channel once it’s done, to avoid hanging the iterator.
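A sketch of the pattern described above. `queryPerson` and `handlePerson` reuse the names from the text, but their signatures, the hypothetical `Person` type, the `/person` route, and the newline-delimited JSON output are assumptions. One addition worth calling out: the producer goroutine also watches a `done` channel (here, the request context’s), because if the handler stopped reading early, a goroutine stuck sending on an unbuffered channel would itself leak.

```go
package main

import (
	"encoding/json"
	"log"
	"net/http"
)

// Person is a hypothetical record type standing in for a database row.
type Person struct {
	ID      int64
	Name    string
	Parents []Person
}

// queryPerson is a hypothetical stand-in for the real query. Instead of
// returning a slice with every record, it returns a receive-only channel
// and sends records one at a time from a goroutine. Closing done lets the
// caller stop early without leaving the goroutine blocked forever; a nil
// done channel never fires.
func queryPerson(done <-chan struct{}, total int) <-chan Person {
	out := make(chan Person) // unbuffered: each send waits for the reader
	go func() {
		defer close(out) // ends the reader's range loop
		for i := 0; i < total; i++ {
			// A real implementation would scan the next database row here.
			p := Person{ID: int64(i), Name: "person"}
			select {
			case out <- p:
			case <-done:
				return
			}
		}
	}()
	return out
}

// handlePerson streams each record to the client as soon as it arrives,
// so memory use stays flat instead of growing with the number of records.
func handlePerson(w http.ResponseWriter, r *http.Request) {
	w.Header().Set("Content-Type", "application/x-ndjson")
	enc := json.NewEncoder(w)
	// The request context is canceled when the handler returns, which
	// also stops the producer goroutine if we bail out early.
	for p := range queryPerson(r.Context().Done(), 3_500_000) {
		if err := enc.Encode(p); err != nil {
			return // the client likely disconnected
		}
	}
}

func main() {
	http.HandleFunc("/person", handlePerson)
	log.Fatal(http.ListenAndServe("localhost:8080", nil))
}
```

Because the channel is unbuffered, the producer can only run one record ahead of the handler, which is what keeps the heap from holding the whole population.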

So with that, we’re keeping only one `Person` in memory at a time, which results in the following:

This is soooo useful when dealing with huge amounts of data that you can’t possibly store in memory.

Parting words

So we learned a bit about memory and how to debug it in the Go programming language.

I hope that I could show you a thing or two with this, and I’d like to encourage you to go even further on what I explained, and also cross-check any additional references.

Also, take a moment to read the sources I used for this post, as they contain a lot of valuable information and top-notch knowledge that may be as useful to you as it was to me. And generally, these folks are smarter than I am.

Happy learning, and happy coding! =)

Sources

- W. R. Stevens and S. A. Rago, Advanced programming in the UNIX environment, Third Edition. in The Addison-Wesley professional computing series. Upper Saddle River, New Jersey: Addison-Wesley, 2013.

- R. Bryant and D. O’Hallaron, Computer Systems GE, 3rd ed. Melbourne: P. Ed Australia, 2015.

- R. H. Arpaci-Dusseau and A. C. Arpaci-Dusseau, Operating systems: three easy pieces. [Place of publication not identified]: Arpaci-Dusseau Books, LLC, 2018.

- A Guide to the Go Garbage Collector

[^fn1]: You can see this example in OS development tutorials when coding a bootloader. Generally, entry points are determined by the format of the binary being produced, such as ELF (Executable and Linkable Format) on UNIX-like systems, where the entry point address is stored in the file header field `e_entry`, at offset 0x18. Read its 4/8 bytes, and you’ll know where your program must start.

[^fn2]: Programming languages may or may not have different memory models, and the same goes for operating systems and CPU architectures. Some of them are designed with inspiration from another language/system. See below.

[^fn3]: Go’s memory model is low-key inspired by C++. See The Go Memory Model for more information.